Artificial Intelligence (AI) has become the backbone of modern innovation – powering chatbots, autonomous systems, medical diagnoses, financial predictions, and even cybersecurity defenses. But as AI grows in capability, it also introduces new attack surfaces and unique vulnerabilities that traditional security models fail to address.

AI security is no longer optional; it is a strategic necessity.

Why AI Security Matters

- Adversarial Threats: AI models can be manipulated with crafted inputs (adversarial examples) that make them misclassify images, text, or even audio.

- Data Privacy Risks: AI models trained on sensitive datasets risk leaking personal or proprietary information.

- Compliance Pressure: With regulations like GDPR and the EU AI Act, and voluntary guidance like the NIST AI Risk Management Framework, organizations must show that their AI systems are secure and trustworthy.

- AI Supply Chain: Pre-trained models, open-source datasets, and third-party APIs all expand the attack surface.

Key Threats in AI Systems

- Data Poisoning: Inserting malicious data into training datasets to corrupt outcomes.

- Adversarial Examples: Small, often imperceptible changes to an input that cause incorrect predictions.

- Model Inversion: Reconstructing sensitive training data from a model's outputs by querying it.

- Model Stealing: Reproducing a proprietary model through repeated queries.

- Prompt Injection (GenAI): Crafting inputs that override a large language model's instructions to bypass its safety controls.

- Shadow AI / Rogue Models: Employees using unapproved AI tools without security vetting.
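To make the adversarial-example threat above concrete, here is a minimal sketch of a fast-gradient-sign-style perturbation against a toy logistic classifier. The weights, bias, input, and `epsilon` are all hypothetical values chosen for illustration; real attacks target far larger models, where much smaller per-feature changes can be enough.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a linear (logistic) classifier."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def fgsm_perturb(weights, bias, x, y_true, epsilon):
    """Shift every feature by +/- epsilon in the direction that raises the loss.

    For log-loss on a logistic model, d(loss)/dx_i = (p - y_true) * w_i,
    so only the sign of that gradient is needed.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

# Hypothetical toy model and input (assumptions, not from any real system).
WEIGHTS = [2.0, -1.5, 0.5]
BIAS = -0.2
x_clean = [0.6, 0.3, 0.4]

x_adv = fgsm_perturb(WEIGHTS, BIAS, x_clean, y_true=1, epsilon=0.2)

print("clean score:", round(predict_proba(WEIGHTS, BIAS, x_clean), 3))
print("adversarial score:", round(predict_proba(WEIGHTS, BIAS, x_adv), 3))
```

With these toy numbers the score crosses the 0.5 decision boundary even though no feature moved by more than `epsilon`, which is the core idea behind evasion attacks.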

Frameworks for AI Security

MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems)

- Maps tactics, techniques, and case studies for AI-specific attacks.

- Examples: adversarial evasion, poisoning, model extraction.

- Helps organizations build threat-informed defense for AI.

NIST AI RMF (Risk Management Framework)

- Voluntary guidance on secure, ethical, and trustworthy AI, organized around four core functions: Govern, Map, Measure, and Manage.

- Includes governance, data integrity, and resilience.

OWASP Top 10 for LLM Applications (2023 – 2025)

- Addresses risks like prompt injection, insecure output handling, and supply chain flaws in large language models.
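As an illustration of the insecure output handling risk mentioned above, the sketch below treats a model's reply as untrusted input and escapes it before placing it in an HTML page. The function name `render_llm_reply` and the `reply` CSS class are hypothetical; the only library call used is the standard library's `html.escape`.

```python
import html

def render_llm_reply(raw_reply: str) -> str:
    """Escape model output before embedding it in HTML.

    The model may have been steered (for example through prompt injection)
    into emitting markup or script, so it is handled like any user input.
    """
    return f'<div class="reply">{html.escape(raw_reply)}</div>'

# A reply that would execute script if inserted into the page unescaped.
malicious_reply = '<script>alert("stolen session")</script>'
print(render_llm_reply(malicious_reply))
```

Escaping only covers the HTML context. Output that flows into SQL, shell commands, or file paths would need the matching control for that context, such as parameterized queries or allow-lists.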

Tools & Technologies for AI Security

- Adversarial Testing Tools:

  - IBM Adversarial Robustness Toolbox (ART)

  - Foolbox

  - TextAttack

- Model Monitoring & Governance:

  - Arize AI, WhyLabs, Fiddler AI (drift, fairness, and anomaly detection)

  - MLflow with security audit logs

- Supply Chain Security for AI:

  - Guardrails AI, LangChain Guardrails (for GenAI safety)

  - Model Card Toolkit (Google) for transparency

- Cloud-Native AI Security:

  - Azure AI Content Safety, AWS GuardDuty for ML, Google Vertex AI Explainability & Security
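Independent of any specific tool above, one basic supply-chain control for pre-trained models is to pin a checksum for each artifact and verify it before loading. The sketch below uses only the Python standard library; the function names and the idea of a separately stored "pinned" digest are assumptions for illustration, not a feature of the listed products.

```python
import hashlib
import hmac
from pathlib import Path

def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_artifact(path: Path, expected_sha256: str) -> None:
    """Raise ValueError if the file does not match the pinned SHA-256 digest."""
    actual = sha256_of_file(path)
    expected = expected_sha256.strip().lower()
    # compare_digest performs a constant-time comparison of the two hex strings.
    if not hmac.compare_digest(actual, expected):
        raise ValueError(f"Checksum mismatch for {path.name}: refusing to load")
```

A call might look like `verify_artifact(Path("model.bin"), pinned_digest)`, where both the file name and `pinned_digest` are placeholders. A checksum only proves that the file is the one that was pinned; it does not prove that the pinned file was safe to begin with.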

Role of AI in Enhancing Security

AI doesn’t just introduce risks – it also strengthens security when applied responsibly:

- Threat Detection: ML-powered SIEMs (e.g., Splunk ML Toolkit, Elastic AI Assistant) detect anomalies faster.

- Code Security: AI assistants (e.g., GitHub Copilot Security) flag insecure code patterns.

- Fraud Prevention: AI models spot unusual financial or transactional behaviors in real time.

- Zero-Day Defense: Generative AI helps simulate novel attack paths for proactive defense.

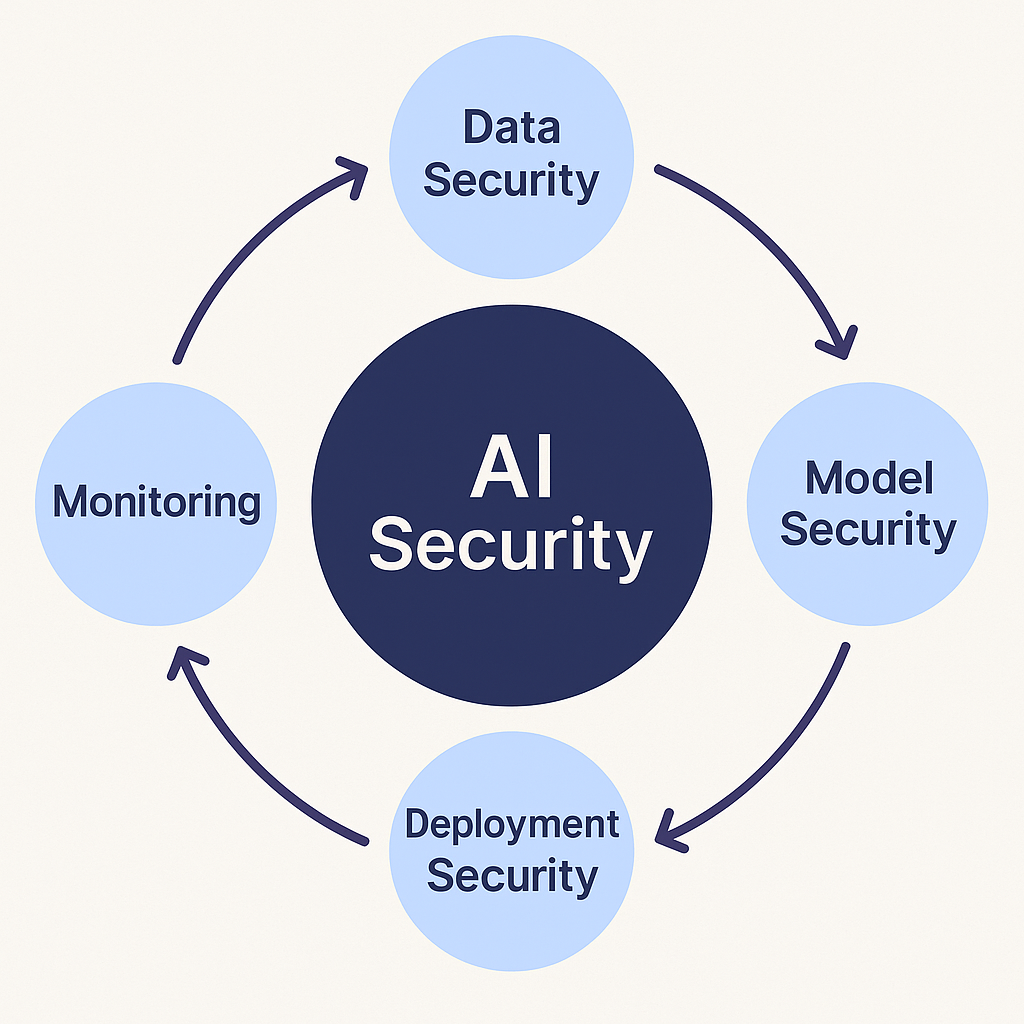
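The fraud-prevention idea above can be sketched with a simple statistical baseline. The example below flags outlying transaction amounts using a modified z-score built from the median and the median absolute deviation (MAD). This stands in for the learned models that production systems use; it is not one of them. The sample amounts and the 3.5 threshold are illustrative assumptions.

```python
from statistics import median

def mad_outliers(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds the threshold.

    The modified z-score is 0.6745 * |x - median| / MAD. Median and MAD are
    used because a single extreme value barely moves them.
    """
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread to measure against
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]

# Hypothetical card transactions for one customer, in the same currency.
amounts = [42.0, 39.5, 45.0, 41.2, 38.9, 44.1, 40.3, 980.0]
print(mad_outliers(amounts))  # prints [7]
```

Real fraud models combine many signals (merchant, location, timing, device), but the principle is the same: learn what normal looks like and score deviations from it.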

Best Practices for Securing AI Systems

- Secure Data Pipeline: Validate and sanitize training data.

- Robust Training: Use adversarial training to improve resilience.

- Model Explainability: Apply XAI (Explainable AI) tools to detect abnormal decisions.

- Continuous Monitoring: Track model drift, bias, and adversarial attempts in production.

- Policy Enforcement: Adopt Security-as-Code and AI Governance policies.

- Regular Red Teaming: Perform AI-specific penetration testing and red teaming.

- Human-in-the-Loop: Keep human oversight in critical AI decisions.
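As one way to approach the continuous monitoring practice above, the sketch below computes a Population Stability Index (PSI) between a training-time feature sample and a production sample. The bin count, the floor used to avoid `log(0)`, and the sample data are assumptions; a commonly cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, which is best read as a starting point for tuning rather than a standard.

```python
import math

def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a new sample.

    Bin edges are derived from the baseline's range; values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share so empty bins do not produce log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: uniform at training time, shifted in production.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print("PSI vs. itself:", psi(baseline, baseline))
print("PSI vs. shifted sample:", round(psi(baseline, shifted), 2))
```

In practice a check like this would run on a schedule for each monitored feature and for the model's output scores, with alerts routed to whoever owns the model.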

Final Thoughts

AI is transforming industries, but without robust security and governance, it risks becoming a liability. The future of AI must be secure by design, with integrated frameworks like MITRE ATLAS and NIST AI RMF, automated testing, and continuous monitoring.

AI is both the problem and the solution – if secured wisely, it can defend as effectively as it innovates.
