In recent years, organisations have matured their software-development practices through models like DevSecOps, which integrates security (“Sec”) into the development (“Dev”) and operations (“Ops”) lifecycle.

Now, as artificial intelligence (AI) and machine-learning (ML) systems become core to business operations, a new discipline is emerging: MLSecOps (Machine Learning Security Operations).

MLSecOps takes the DevSecOps ethos and extends it beyond code to data, models, model supply chains, model monitoring, and the threats peculiar to AI/ML. In this post we’ll explore:

- Why DevSecOps isn’t sufficient for AI/ML

- What MLSecOps brings to the table

- A lifecycle view: embedding security across the AI workflow

- Key security domains for ML systems

- Practical strategies & tooling you can adopt

- Challenges, organisational readiness & next steps

Why DevSecOps alone isn’t enough for AI/ML

Let’s start by revisiting DevSecOps. Its core principle is to shift security left across the software-development lifecycle (design, code, test, deployment, operations), so that security becomes a built-in responsibility rather than a late-stage afterthought. This has led to considerable improvements in secure coding, automated scanning, dependency management, CI/CD pipelines, infrastructure-as-code security and more. Wiki

But when you build AI/ML systems, several things change:

- You’re not just shipping software code; you’re ingesting data, training models, tuning hyperparameters, deploying models, monitoring drift, retraining, possibly doing online learning.

- The “artifacts” include datasets, pipelines, model weights, embeddings, feature stores, sometimes third-party pre-trained models or large language models (LLMs).

- The risk surface is broader: data poisoning, adversarial inputs, model inversion, model theft, supply-chain compromise of model components, bias and fairness issues. Crowdstrike

- Traditional security tools (static code analysis, OWASP API checks, container scans) will catch many vulnerabilities but may miss ML-specific threats (e.g., a poisoned training dataset, subtle distribution shifts, biased outputs, model extraction).

- The speed and evolution of ML models (e.g., continuous retraining, rapid experimentation) mean the lifecycle is more dynamic and less deterministic than that of classical software. Help Net Security

Hence: DevSecOps provides a foundation, but to secure AI/ML you need a specialized lifecycle and mindset. This is where MLSecOps comes in.

What is MLSecOps?

In simple terms: MLSecOps = integrating security deeply into the ML lifecycle (from data-ingestion to model deployment to monitoring and retraining), making sure the entire chain (data → model → deployment → feedback) is resilient, auditable, trustworthy.

According to CrowdStrike:

“Machine learning security operations (MLSecOps) is an emerging discipline that tackles these challenges by focusing on the security of machine learning systems throughout their lifecycle. It addresses issues like securing data used for training, defending models against adversarial threats, ensuring model robustness, and monitoring deployed systems for vulnerabilities.” Crowdstrike

From another source:

“MLSecOps extends DevSecOps principles into AI and across the machine learning lifecycle. The MLSecOps framework focuses on protecting code, algorithms and data sets that touch intellectual property (IP) and other sensitive data.” Help Net Security

In short: If DevSecOps = security integrated into software dev + ops, then MLSecOps = security integrated into ML dev + ops + model lifecycle.

Lifecycle view: Securing the AI development pipeline

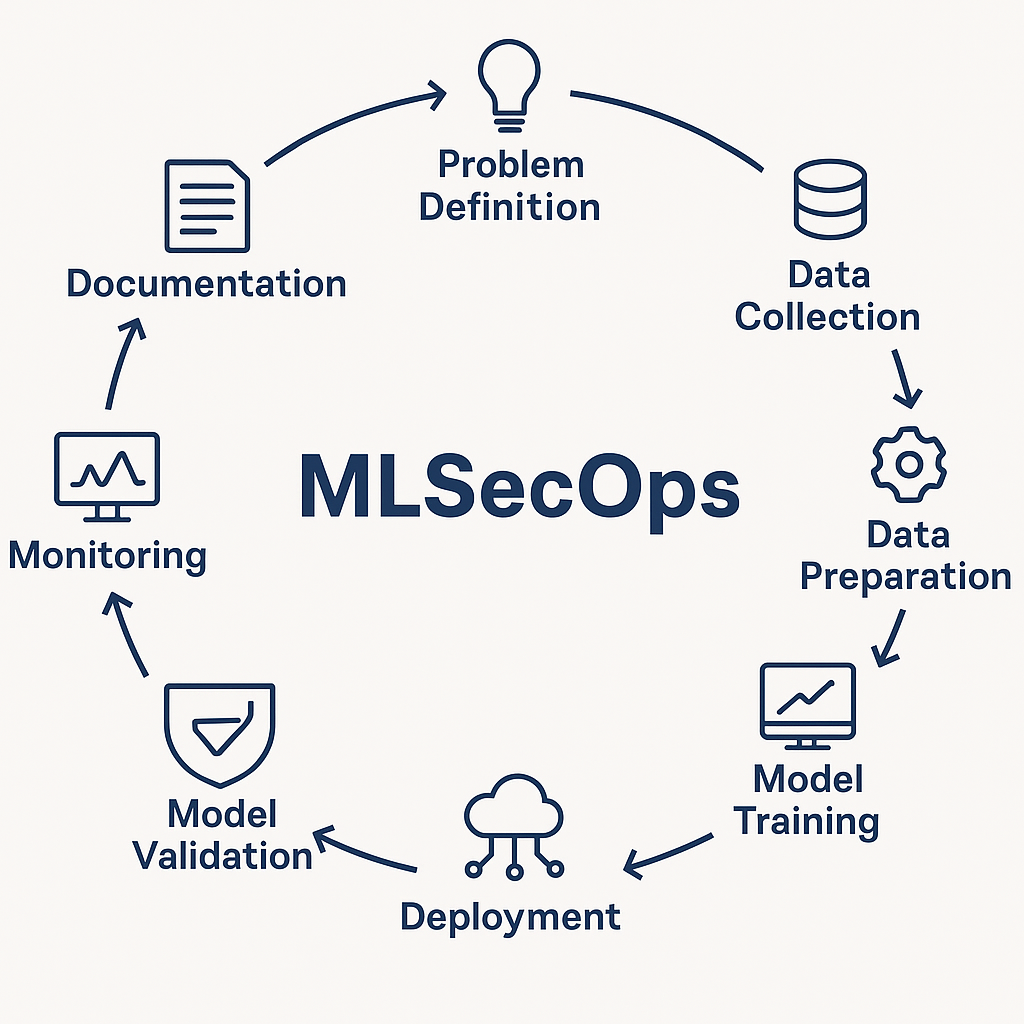

To operationalise MLSecOps, it’s helpful to view the full lifecycle of an ML/AI system and map security controls to each stage. A 10-stage lifecycle (for example) might look like:

- Problem definition & scoping

- Data collection / ingestion

- Data preparation & preprocessing

- Feature engineering / model experimentation

- Model training & evaluation

- Model validation & certification (fairness, robustness, bias)

- Deployment / serving infrastructure

- Monitoring (model drift, anomalous inputs, performance degradation)

- Maintenance / retraining / retirement

- Documentation, governance & audit (model provenance, Bill of Materials)

At each of these stages, there are ML-specific threat surfaces and control needs. One article describes this as:

“This involves integrating security principles deeply into every phase of the AI lifecycle, from problem definition to post-deployment monitoring.” Medium

Examples of stage-specific considerations:

- Problem definition: Are you clear about what the model is supposed to do? What’s the threat model? Could the model be misused? Could a wrong objective lead to unintended consequences?

- Data collection/ingestion: Ensuring provenance of data, verifying datasets aren’t poisoned or manipulated, auditing external data sources, protecting privacy of training data.

- Data preparation: Feature leakage, unintended bias, sanitised vs unsanitised data, inadvertent inclusion of sensitive attributes, data anonymisation pitfalls.

- Model training & evaluation: Ensuring the training pipeline is secure, managing third-party pre-trained models, avoiding adversarial training inputs, checking hyperparameter leakage, logging training metadata.

- Deployment/serving: Securing API endpoints for inference, managing access control, protecting model weights and embeddings, guarding against model extraction and inversion attacks, securing compute infrastructure.

- Monitoring & maintenance: Detecting drift, concept change, adversarial attacks, ensuring that models remain robust over time, auditing decisions, ensuring explainability and fairness metrics.

- Governance/documentation: Maintaining model provenance (who built it, when, on what data), Bill of Materials for ML (data sets, model components, pre-trained modules), audit logs, regulatory compliance (GDPR, etc.).

This lifecycle-centric view helps you embed security not as a firewall bolted on at the end, but as a continuous flow.

Key security domains in MLSecOps

Here are some of the major domains and themes in MLSecOps:

1. Secure Data Management

Data is the fuel of ML, and if the data is compromised, the model will be too. Threats include data poisoning (tampering with training data), data leakage (exposure of sensitive information), data supply-chain risk (third-party data sources), feature bias, and provenance issues. Crowdstrike

Mitigations include: strong data governance, hashing/verifying datasets, role-based access, anonymisation or differential-privacy techniques, logging data lineage, third-party data vetting.
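As an illustration of the "hashing/verifying datasets" mitigation, here is a minimal sketch in Python. It assumes the digest is recorded at ingestion time and stored somewhere the training job cannot modify; the file name, the helper names (`sha256_of_file`, `verify_dataset`) and the demo data are all hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file in chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_dataset(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the recorded one."""
    return sha256_of_file(path) == expected_sha256


# Demo on a throwaway file (hypothetical data, not a real dataset).
with tempfile.TemporaryDirectory() as tmp:
    dataset = Path(tmp) / "train.csv"
    dataset.write_bytes(b"feature,label\n0.1,0\n0.9,1\n")
    recorded = sha256_of_file(dataset)  # digest stored at ingestion time
    ok_before = verify_dataset(dataset, recorded)
    dataset.write_bytes(b"feature,label\n0.1,1\n0.9,1\n")  # simulated tampering
    ok_after = verify_dataset(dataset, recorded)

print(ok_before, ok_after)  # True False
```

A digest check like this only detects changes made after the digest was recorded. It says nothing about whether the data was already poisoned at the source, which is why it sits alongside vetting and lineage logging rather than replacing them.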

2. Model & Algorithm Security

Models can be attacked: adversarial inputs, model inversion (extracting training data), model stealing (IP theft), target-distribution shift, model tampering. openssf.org

Mitigations: adversarial testing, robustness evaluations, watermarking models, encryption of model artifacts, access control for model assets, monitoring for abnormal behaviour.
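To make "robustness evaluations" a little more concrete, the sketch below measures how often a model's prediction flips under small random input noise. This is a deliberately simple stability check, not a real adversarial attack (which would search for worst-case perturbations), and the toy linear classifier, the function names and the noise model are assumptions made for illustration.

```python
import random


def predict(weights, bias, x):
    """Toy linear classifier: returns 1 if w.x + b > 0, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def flip_rate(weights, bias, inputs, epsilon, trials=100, seed=0):
    """Fraction of noisy copies whose prediction differs from the clean one.

    Each feature is shifted by uniform noise in [-epsilon, epsilon].
    """
    rng = random.Random(seed)  # fixed seed keeps the check reproducible
    flips = 0
    for x in inputs:
        baseline = predict(weights, bias, x)
        for _ in range(trials):
            noisy = [xi + rng.uniform(-epsilon, epsilon) for xi in x]
            if predict(weights, bias, noisy) != baseline:
                flips += 1
    return flips / (len(inputs) * trials)


# Points far from the decision boundary should be stable under small noise.
far_from_boundary = [[2.0, 2.0], [-2.0, -2.0]]
rate = flip_rate([1.0, 1.0], 0.0, far_from_boundary, epsilon=0.1)
print(rate)  # 0.0
```

In a pipeline, a check like this could run against a held-out sample with a threshold on the flip rate. A low flip rate under random noise does not imply robustness to a motivated attacker, so it is best read as a cheap regression signal.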

3. Supply Chain / Component Provenance

Just like software has dependency vulnerabilities, ML systems have dependencies: pre-trained models, data sets, feature libraries, embedding models, toolkits. These can bring in malicious code or backdoors. mlsecops.com

Mitigations: Machine Learning Bill of Materials (MLBoM), verifying component provenance, scanning for known vulnerabilities in libraries, applying supply-chain security frameworks (cf. SLSA for software). openssf.org
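One way to picture an MLBoM is as a structured record of everything that went into a model. The sketch below is an assumption about what such a record might contain; the class names, field names and component entries are hypothetical and do not follow any particular standard schema.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Component:
    name: str
    kind: str      # e.g. "dataset", "pretrained-model", "library"
    version: str
    sha256: str    # digest recorded when the component was vetted ("" if none)
    source: str    # where the component was obtained


@dataclass
class MLBoM:
    model_name: str
    model_version: str
    components: list = field(default_factory=list)

    def to_json(self) -> str:
        """Serialise the record so it can be stored next to the model artifact."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    def unverified(self) -> list:
        """Names of components that were recorded without a digest."""
        return [c.name for c in self.components if not c.sha256]


# Hypothetical entries for illustration only.
bom = MLBoM(
    model_name="fraud-detector",
    model_version="1.4.0",
    components=[
        Component("transactions-2024", "dataset", "2024.06", "a" * 64, "internal-warehouse"),
        Component("base-encoder", "pretrained-model", "3.1", "", "public-model-hub"),
    ],
)
print(bom.unverified())  # ['base-encoder']
```

Keeping the record machine-readable means a pipeline step can refuse to promote a model whose bill of materials lists components without a verified digest.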

4. Infrastructure, Deployment & API Security

Serving ML models often involves APIs, microservices, cloud/edge infrastructure. That means you need to protect infrastructure the same way you protect software, but also consider ML-specific risks (e.g., inference API abused to extract model info). crowdstrike.com

Mitigations: secure CI/CD pipelines for ML, container security, network segmentation, API authentication/authorization, rate-limiting, logging of model API usage, monitoring for usage spikes and anomalous behaviour.
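Rate-limiting is one of the simpler controls against inference-API abuse, since extraction-style attacks typically need many queries. Below is a minimal in-memory token-bucket sketch keyed by client; the class name, parameters and client IDs are hypothetical, and a production deployment would more likely rely on an API gateway or a shared store than on per-process state.

```python
import time


class TokenBucket:
    """Per-client token bucket: `capacity` is the burst size, `rate` is tokens per second."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.buckets = {}   # client_id -> (tokens, last_timestamp)

    def allow(self, client_id: str) -> bool:
        """Spend one token for this client if available; otherwise reject."""
        now = self.clock()
        tokens, last = self.buckets.get(client_id, (self.capacity, now))
        # Refill according to elapsed time, never beyond the burst capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self.buckets[client_id] = (tokens - 1, now)
            return True
        self.buckets[client_id] = (tokens, now)
        return False


# Demo with a fake clock: a burst of 2 requests, refilled at 1 request per second.
fake_now = [0.0]
limiter = TokenBucket(capacity=2, rate=1.0, clock=lambda: fake_now[0])
decisions = [limiter.allow("client-a") for _ in range(3)]
print(decisions)  # [True, True, False]
```

Rejected requests are also worth logging: a client that repeatedly hits the limit is exactly the kind of anomaly the monitoring mitigation above is meant to surface.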

5. Monitoring, Drift, Explainability & Fairness

Unlike static software, ML models degrade over time (drift), face changing distributions, and may make biased or unfair decisions. MLSecOps must include mechanisms for continuous monitoring and governance. Medium

Key practices: model performance monitoring, bias/fairness metrics, explainability and documentation frameworks (model cards, datasheets for datasets), audit logs of decisions, triggers for retraining or shutdown when necessary.
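Drift monitoring can start with a simple statistic comparing live inputs against the training-time distribution. The sketch below computes the Population Stability Index (PSI) for a single numeric feature; PSI is one common choice rather than the only one, and the equal-width binning, the `eps` floor and the sample data are assumptions made for illustration.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference and a live sample.

    Uses equal-width bins over the reference range; values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor at eps so empty bins do not cause log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(100)]    # roughly uniform on [0, 1)
live = [0.5 + i / 200 for i in range(100)]   # shifted into the upper half

print(psi(reference, reference))  # 0.0
print(psi(reference, live) > 0.25)  # True
```

A commonly quoted rule of thumb treats PSI above roughly 0.25 as a significant shift, but the right threshold depends on the feature and the use case, so it is best tuned per model before wiring it to an alert or a retraining trigger.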

6. Governance, Risk & Compliance (GRC)

As AI/ML use becomes regulated (GDPR, forthcoming AI regulations in many jurisdictions), you need governance frameworks, auditability, transparency, traceability. MLSecOps addresses this domain explicitly. mlsecops.com

Practices: maintaining MLBoM, model cards, dataset datasheets, documenting lifecycle steps, having security review gates, aligning with internal/external regulatory standards.

Practical Strategies & Tooling

Here are some actionable recommendations for organizations looking to implement MLSecOps:

- Shift Left for ML: Just like in DevSecOps, bring security in early, when you define the problem, choose the data sets and design the model architecture. Don’t wait until after deployment. Help Net Security

- Cross-Functional Teams: Involve security, ML/data scientists, operations, product owners collaboratively. Security should not be siloed.

- Build an ML Security Architecture: Define reference architectures for your ML stack (data ingress, feature store, training pipeline, model registry, serving layer). Overlay security controls.

- Automate Where Possible: Automate scanning of datasets (for anomalies, bias), automated tests for adversarial robustness, integrate model governance tools into pipelines, automated alerts for drift.

- Maintain Model Provenance and Bill of Materials: Track data origins, model versions, pre-trained components, third-party dependencies. Use MLBoM. openssf.org

- Apply Threat Modelling to ML: Use frameworks that map ML-specific threats (e.g., input manipulation, membership inference, adversarial attacks) to lifecycle stages. openssf.org

- Secure the Infrastructure & APIs: Make sure the serving infrastructure is hardened. Enforce access control, and protect against API abuse and model extraction.

- Monitor & Maintain Continuously: Set up monitoring of models in production: input distributions, output anomalies, latency, drift, fairness metrics. Have incident response playbooks for ML failures.

- Governance & Audit: Document the lifecycle, decisions, biases, drift. Include review gates, versioning, rollback strategies, model retirement policies.

- Training & Culture: Educate data scientists, ML engineers and operations teams on ML security risks, not just traditional software security. Many ML practitioners may not have security training. openssf.org

Challenges & Considerations

While MLSecOps is essential, implementing it has its challenges:

- Complexity & Novelty: ML systems are more complex than typical software systems; many organisations don’t yet have mature ML governance.

- Lack of tooling maturity: While DevSecOps has many established tools, ML security tooling is still emerging.

- Cultural and skill gaps: Data scientists may not be trained in secure development practices; security teams may not deeply understand ML workflows.

- Dynamic evolution: Models evolve, data drifts, adversaries adapt; security has to keep up.

- Regulation & ethics: Governance for fairness, bias and explainability may not yet be standard business practice.

- Cost vs benefit: Organisations may view ML security as an overhead; teams need to demonstrate ROI (risk reduction, compliance, trust).

The Way Forward: From DevSecOps to MLSecOps

- Start with your ML pipeline: map out your current ML lifecycle (data → model → deployment → monitoring).

- Perform a gap assessment: where do you currently apply security? Where are the blind spots?

- Educate your teams: host training sessions for data scientists, ML engineers, security ops on ML-specific threats.

- Define MLSecOps processes: security review gates for data ingestion, model training, deployment; incorporate model-specific threat modelling; define monitoring & incident response for ML.

- Adopt enabling tooling: e.g., model registries, dataset versioning, logs for model serving, adversarial robustness testing.

- Govern and document: maintain model/dataset artefacts, Bill of Materials for ML, model cards, dashboards for bias/fairness/drift.

- Iterate and monitor: security is never “done”. As models evolve and new threats emerge (especially in generative AI/agentic AI), MLSecOps must evolve. protectai.com
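The security review gates mentioned in the steps above can be encoded as an automated check that runs before a model is promoted. The following is a minimal sketch under stated assumptions: the evidence keys, gate names and default drift limit are hypothetical, and a real gate would pull its evidence from your own pipeline and registry.

```python
from dataclasses import dataclass


@dataclass
class GateResult:
    name: str
    passed: bool


def evaluate_gates(evidence: dict, drift_limit: float = 0.25) -> list:
    """Map pipeline evidence to pass/fail gate results.

    All keys and the default drift limit are hypothetical. Missing
    evidence counts as a failure, so the gates fail closed.
    """
    drift = evidence.get("drift_score")
    return [
        GateResult("dataset_digest_verified", evidence.get("dataset_digest_verified") is True),
        GateResult("threat_model_reviewed", evidence.get("threat_model_reviewed") is True),
        GateResult("drift_within_limit", drift is not None and drift <= drift_limit),
    ]


def release_allowed(results) -> bool:
    """A release proceeds only if every gate passed."""
    return all(r.passed for r in results)


evidence = {"dataset_digest_verified": True, "threat_model_reviewed": True}
results = evaluate_gates(evidence)
failed = [r.name for r in results if not r.passed]
print(failed)  # ['drift_within_limit'] because no drift score was supplied
```

Failing closed is a deliberate choice here: a gate that passes when evidence is missing tends to hide exactly the blind spots the gap assessment is meant to find.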

Why this matters now

With AI/ML systems increasingly embedded into business-critical processes (fraud detection, autonomous decisions, content moderation, predictive analytics), the risk surface has expanded. A breach, misuse or malfunction of a model can lead to reputational damage, regulatory fines, biased or discriminatory outcomes, IP theft, or incorrect business decisions. MLSecOps helps organisations manage that risk.

In addition, regulators and stakeholders are paying closer attention to AI trustworthiness, governance, transparency and fairness. Having a mature MLSecOps practice helps build trust among customers, partners and regulators.

Conclusion

The journey from DevSecOps to MLSecOps isn’t just a semantic shift; it represents the imperative to rethink how we build, deploy and maintain secure systems when those systems include learning, evolving models, vast datasets and complex inference pipelines. By treating security as integral across the ML lifecycle, not as an afterthought, organisations can build AI/ML systems that are not only powerful and performant, but also trusted, resilient, and safe.

If you’re embarking on (or already running) AI initiatives, now is the time to ask: “Do we have MLSecOps practices in place?” And if not, how soon can we put them in place?
